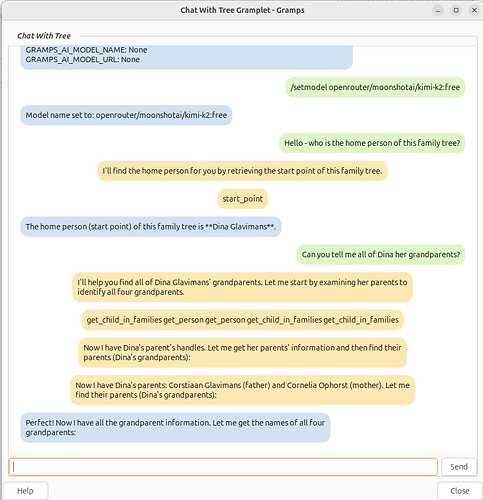

Based on earlier work from @dsblank, I have created a Gramplet called “ChatWithTree”.

- ChatWithTree shows a chat-like interface

- There are commands to select models from several different AI providers

- Addition of a “search by name” tool

- Extended the “get person” tool to deliver a bit more data

- Apart from the free models that Google Gemini provides, you can also connect to OpenAI, Anthropic, OpenRouter etc., all thanks to the llm Python module

- You have to create an API key for the model that you want to use

- When the AI bot is thinking, it shows the thinking in the chat - showing the strategy to answer difficult questions

- The Gramplet is built for Gramps version 6

I’m looking for feedback on the current state of the Gramplet, and I’m very curious which models you will try it out with. I personally tried it mainly with gemini-2.0-flash and moonshot/kimi2:free; both can work with the created tools reasonably well.

You can install the Gramplet by adding a new project to the addon manager:

Name: ChatWithTree

Url: https://raw.githubusercontent.com/MelleKoning/addons/refs/heads/myaddon60/gramps60/

The tool is currently marked as “unstable” so you have to set the plugin filter to unstable to find it.

Then in the Dashboard, you can add the ChatWithTree Gramplet.

When you select a chat model, the API key for that model must already be set as an environment variable before you start Gramps! Otherwise you will get an error.

Type “/help” for examples of how to set environment variables for keys for different providers.

On Linux, an environment variable can be set simply with a command like `export OPENROUTER_API_KEY="sk…"`
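If you want to check your environment before launching Gramps, something like the following small Python sketch could help. It is not part of the addon. `OPENROUTER_API_KEY` is the variable named above; the function name `missing_api_keys` and the `REQUIRED` list are only illustrative, and you would add the variable names that “/help” shows for the other providers you use.

```python
import os


def missing_api_keys(required, env=None):
    """Return the names in `required` that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


# OPENROUTER_API_KEY comes from this post; extend the list with the
# variable names that "/help" reports for your other providers.
REQUIRED = ["OPENROUTER_API_KEY"]

if __name__ == "__main__":
    missing = missing_api_keys(REQUIRED)
    if missing:
        print("Set these before starting Gramps:", ", ".join(missing))
    else:
        print("All listed API keys are set.")
```

Run it in the same terminal session from which you start Gramps, since an `export` only applies to that session and the programs started from it.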

Openrouter has a few free open models that can be tried out.

Here is an example screenshot of what it looks like:

- The green balloons are what I have typed.

- The yellow balloons show the “thinking strategy”: how the AI model is going to use the tools that are provided. There will always be one balloon showing which local tools have been used to read Gramps database information.

- The last blue balloon is the final answer of the AI model.

As you can see, it did not provide a good answer. This sometimes happens because the number of “turns” the AI is allowed to take is currently quite limited: the addon sets a maximum number of turns to limit the search time.
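To illustrate why a turn limit can cut an answer short, here is a toy sketch of a tool-calling loop with a maximum number of turns. This is not the addon's actual code: `run_with_turn_limit`, `fake_model`, the `PEOPLE` data and the tuple format are all made up for the example, and the two tool functions are simplified stand-ins for the “search by name” and “get person” tools.

```python
def run_with_turn_limit(model_step, tools, question, max_turns=3):
    """Call model_step until it answers or the turn budget runs out.

    model_step(history) returns ("tool", name, argument) or ("answer", text).
    """
    history = [("user", question)]
    for _ in range(max_turns):
        step = model_step(history)
        if step[0] == "answer":
            return step[1], history
        _, name, argument = step
        # Run the requested local tool and feed the result back to the model.
        history.append(("tool", name, tools[name](argument)))
    return None, history  # out of turns; the user can nudge the model


# Hypothetical data and tools, standing in for the Gramps database.
PEOPLE = {"I0001": "Anna Jansen", "I0002": "Pieter Jansen"}


def search_by_name(name):
    return [pid for pid, full in PEOPLE.items() if name.lower() in full.lower()]


def get_person(pid):
    return PEOPLE.get(pid)


def fake_model(history):
    """Scripted 'model': search first, then fetch the person, then answer."""
    results = [h for h in history if h[0] == "tool"]
    if not results:
        return ("tool", "search_by_name", "anna")
    if len(results) == 1:
        return ("tool", "get_person", results[0][2][0])
    return ("answer", f"Found {results[1][2]}")


tools = {"search_by_name": search_by_name, "get_person": get_person}
answer, _ = run_with_turn_limit(fake_model, tools, "Who is Anna?", max_turns=3)
limited, _ = run_with_turn_limit(fake_model, tools, "Who is Anna?", max_turns=2)
```

With three turns the scripted model gets to its answer; with two it runs out after the tool calls and returns nothing, which is the situation where a follow-up question helps.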

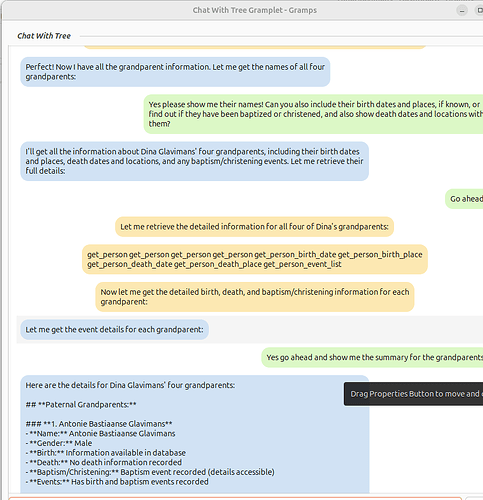

But we can give the model a nudge by asking for more information.

Or as you can see, we might have to give more nudges, but some of the found data is there.

It can happen that the chat history becomes too large for the remote model though.

We can switch to another model with a larger context window in the middle of the chat:

We can also use the information that the model has itself:

(The chat is cut a bit.) It shows we can also tap into the common knowledge of the large language models themselves:

I hope the ChatWithTree Gramps addon finds its way to be useful for a nice chat with your own genealogy database!

Cheers,

Melle